Why the 2016 Election Polls Got It Wrong: The ARF Webinar

“This is the only case study we’ll need for a long time to illustrate why we can’t rely solely on what people say in a survey or a poll.” – Dr. Aaron Reid.

On November 29, The ARF and GreenBook hosted an extremely timely webinar: “Predicting Election 2016: What Worked, What Didn’t and the Implications for Marketing & Insights.” The event, a hybrid virtual/in-person meet-up, examined what the predictive wins and misses of the 2016 election mean for marketing and analytics.

Take Hillary Clinton’s 90% chance of winning the presidency. That was a miss.

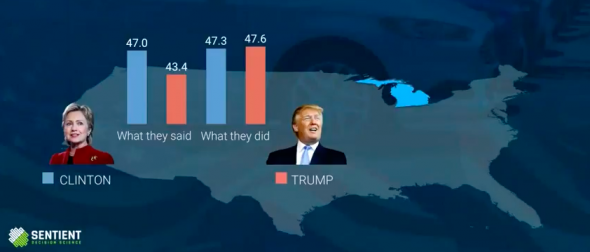

What Voters Said Versus What They Did

Sentient founder Dr. Aaron Reid, one of the featured presenters, focused on how market researchers can do a better job. His main point: measuring emotional associations yields important data that can be combined with people’s reason-based survey answers to better predict outcomes, political or otherwise.

The first step, Dr. Reid explained, is to acknowledge that what people are reporting in surveys and polls is different from what they’re actually doing.

Consider some of the election day results in key states.

In Wisconsin, 46.8 percent of people said they’d vote for Clinton and 40.3 percent said they’d vote for Trump. That stands in stark contrast to what voters actually did: 46.9 percent voted for Clinton, but 47.9 percent voted for Trump, a result well outside the margin of error.
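The size of the Wisconsin miss can be checked with a little arithmetic. The Python sketch below uses only the percentages quoted above. It treats them as if they came from a single simple random sample, and the sample size of 1,000 used for the margin of error is an assumption, since the article does not report one. The formula is the standard worst-case (p = 0.5) 95% margin of error for one candidate's share.

```python
import math

# Wisconsin figures as reported in this article (percentages).
POLL = {"Clinton": 46.8, "Trump": 40.3}
RESULT = {"Clinton": 46.9, "Trump": 47.9}


def margin(shares: dict[str, float]) -> float:
    """Clinton's lead over Trump in percentage points (negative means Trump led)."""
    return shares["Clinton"] - shares["Trump"]


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """95% margin of error, in percentage points, for one candidate's share
    in a simple random sample of size n (worst case at p = 0.5)."""
    return 100 * z * math.sqrt(p * (1 - p) / n)


poll_margin = round(margin(POLL), 1)                     # Clinton lead in the poll
result_margin = round(margin(RESULT), 1)                 # Clinton lead in the result
margin_miss = round(poll_margin - result_margin, 1)      # how far the lead was off
trump_miss = round(RESULT["Trump"] - POLL["Trump"], 1)   # Trump's share, understated

# Hypothetical sample size: the article does not give one.
moe = round(margin_of_error(1000), 1)

print(f"Poll margin {poll_margin:+.1f}, result {result_margin:+.1f}, miss {margin_miss:.1f} pts")
print(f"Trump share off by {trump_miss:.1f} pts vs. a ±{moe:.1f}-pt margin of error (n=1000)")
```

Under these assumptions, the polls overstated Clinton's lead by about 7.5 points, and Trump's share alone came in 7.6 points above the polled figure, more than double the roughly ±3.1-point margin a 1,000-person sample would carry.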

The polls got it wrong in Michigan as well.

“Now, imagine that was your product,” Dr. Reid said. “You did research and you said in Wisconsin you were going to beat the competition and you didn’t. You did research in Michigan and you said you were going to beat the competition and you didn’t. How many more days do you think you’d be in a job?”

There could be no more powerful case study for why better methods are needed.

Why We Can’t Rely Solely On What People Say

The reason the 2016 election polls (and so many others) got it wrong comes down to the Can’t Say/Won’t Say problem.

Market researchers have known about the Won’t Say problem for years. Consumers won’t tell you the true drivers of their behavior because they’re afraid of social embarrassment. This is common when there’s a social stigma attached to a purchase or to other behavior they may be engaging in. Consequently, if a researcher asks questions about a product, service, or brand that evokes embarrassment, consumers may not be willing to admit they’re going to buy it.

“The same may have been true around the social stigma for supporting Trump,” Dr. Reid said. “There was such a bias in social circles against what he was saying and the things he was standing for, there was a potential bias hindering people from admitting [they would vote for him].”

But the bigger problem facing polling and consumer research is the Can’t Say problem.

This issue grows out of insights from the behavioral sciences, which have revealed that consumers often don’t have conscious access to the true drivers of their behavior.

“If they don’t have conscious access to the drivers of their behavior, and yet we ask them explicit questions about what they’re going to do and why they’re going to do it, the data we get out of those questions is necessarily going to be wrong,” Dr. Reid stressed.

How to Get More Accurate Answers

Market researchers need methods that get around the Can’t Say/Won’t Say problem. But that doesn’t mean throwing out explicit measures entirely. According to Dr. Reid, integrating implicit techniques with explicit ones is the way to build a model of decision-making that produces more accurate forecasts.

“The nice thing about this is you’ve got emotional data at the individual respondent level,” he explained. “You can combine it with the reason-based reflective data that comes out of your typical traditional data so you’re capturing both the emotion and reason. What results is quantitative data you can use to predict an outcome.”
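To make the idea of combining emotion and reason at the respondent level more concrete, here is a minimal Python sketch. It assumes a simple logistic model with one explicit input and one implicit input. The `Respondent` fields, their scales, and the weights are all hypothetical and are not Sentient's actual method; in practice the weights would be estimated from past outcomes.

```python
import math
from dataclasses import dataclass


@dataclass
class Respondent:
    # Explicit (reason-based) measure: stated likelihood of choosing option A, 0 to 1.
    stated_intent: float
    # Implicit (emotional) measure: hypothetical association score toward A,
    # from -1 (strongly negative) to +1 (strongly positive).
    implicit_score: float


# Hypothetical weights chosen for illustration only.
INTERCEPT = -2.0
W_EXPLICIT = 3.0
W_IMPLICIT = 2.0


def predict_choice(r: Respondent) -> float:
    """Probability that the respondent actually chooses A, from a logistic
    model that uses both the explicit and the implicit measure."""
    z = INTERCEPT + W_EXPLICIT * r.stated_intent + W_IMPLICIT * r.implicit_score
    return 1 / (1 + math.exp(-z))


# Two respondents who *say* the same thing but feel differently about option A.
enthusiast = Respondent(stated_intent=0.7, implicit_score=0.6)
reluctant = Respondent(stated_intent=0.7, implicit_score=-0.6)

print(f"enthusiast: {predict_choice(enthusiast):.2f}")
print(f"reluctant:  {predict_choice(reluctant):.2f}")
```

Both respondents give the same stated answer, yet the model assigns them very different probabilities of following through (roughly 0.79 versus 0.25 with these made-up weights). That gap is exactly what an explicit-only survey cannot see.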

The model isn’t wrong; we’re simply adding better data to it.

You can hear about the implicit association studies we did during the primaries, as well as the remarkable difference adding implicit measures can make in any research, in the full webinar above. Dr. Reid’s part of the presentation begins at around the 16-minute mark.